How were these homes captured?

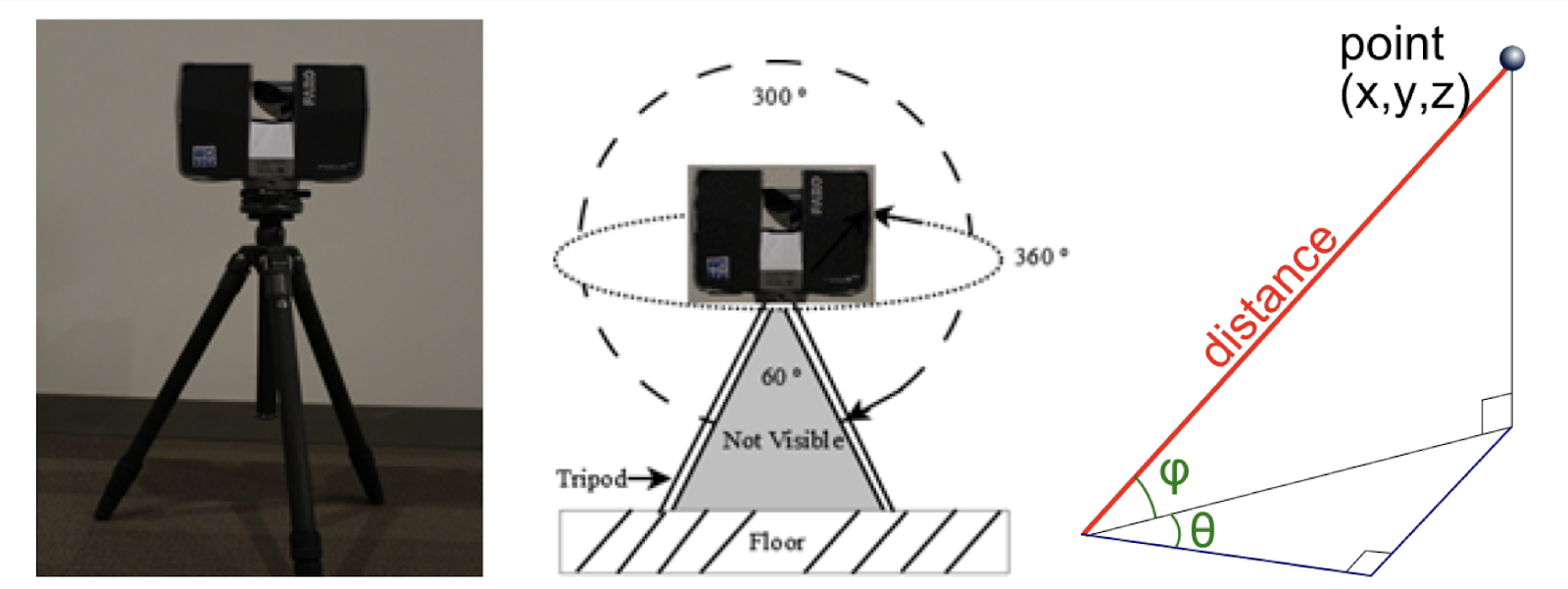

To capture the homes in this dataset, we used a technology called LiDAR, which stands for Light Detection and Ranging.

At its most basic, the technology works by emitting a laser pulse and measuring the time it takes for the signal to return. The longer the time, the farther away the reflecting point is from the scanner. Our 3D scanner repeats this measurement while spinning in a circle, capturing millions of data points a minute.
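The relationship between return time and distance can be sketched in a few lines. This is only an illustration of the pulsed time-of-flight idea described above, not the scanner's actual processing; the function name is hypothetical, and the sketch assumes the pulse travels at the vacuum speed of light.

```python
SPEED_OF_LIGHT_M_PER_S = 299_792_458.0  # speed of light in a vacuum


def distance_from_round_trip(seconds: float) -> float:
    """Distance to the reflecting surface for a measured round-trip time.

    The pulse travels out and back, so the one-way distance is half of
    the total path the light covered.
    """
    return SPEED_OF_LIGHT_M_PER_S * seconds / 2.0


# A 20 nanosecond round trip corresponds to roughly 3 m.
print(distance_from_round_trip(20e-9))
```

The tiny time scales involved are why the timing has to be so precise: one nanosecond of round-trip time corresponds to about 15 cm of distance.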

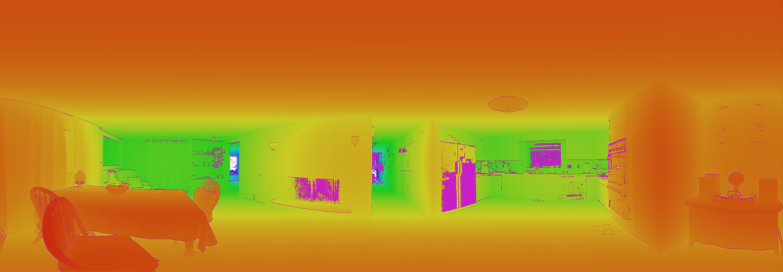

Each of these millions of data points is represented as three numbers, giving its position in three dimensions relative to the scanner. These numbers can be visualized as a depth heatmap, showing the raw depth information gathered.
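As a rough sketch of where the three numbers might come from, the snippet below converts one measurement (a distance plus the two angles the scanner was pointing at) into an XYZ point, and computes the depth value a heatmap would color. The angle conventions, the z-up axis, and the function names are assumptions made for illustration.

```python
import math


def spherical_to_cartesian(distance, azimuth, elevation):
    """Convert a range measurement to (x, y, z) relative to the scanner.

    Assumed conventions: angles in radians, azimuth measured around the
    vertical (z) axis, elevation measured up from the horizontal plane.
    """
    horizontal = distance * math.cos(elevation)
    x = horizontal * math.cos(azimuth)
    y = horizontal * math.sin(azimuth)
    z = distance * math.sin(elevation)
    return (x, y, z)


def depth(point):
    """Straight-line distance from the scanner (the origin) to a point."""
    x, y, z = point
    return math.sqrt(x * x + y * y + z * z)


point = spherical_to_cartesian(2.0, 0.0, 0.0)  # straight ahead, 2 m away
print(point, depth(point))
```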

Because this data captures only the geometry of the scene, the scanner also photographs the environment in 360 degrees. This photograph provides color information for each point in space.
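One way to picture the color lookup: each point lies in some direction from the scanner, and that direction corresponds to a pixel in the 360 photograph. The sketch below assumes the photograph is stored as an equirectangular panorama (a list of rows of RGB tuples), that it is aligned with the scanner's axes with z pointing up, and that the camera and laser share the same position. All of these are assumptions for illustration, and the function names are hypothetical.

```python
import math


def panorama_pixel(point, width, height):
    """Return (row, col) of the panorama pixel that looks toward `point`."""
    x, y, z = point
    azimuth = math.atan2(y, x)                   # -pi..pi around the vertical axis
    elevation = math.atan2(z, math.hypot(x, y))  # -pi/2..pi/2 above the horizon
    col = int((azimuth + math.pi) / (2 * math.pi) * width)
    row = int((math.pi / 2 - elevation) / math.pi * height)
    # Clamp the edge cases (straight down, or exactly opposite the seam).
    return min(row, height - 1), min(col, width - 1)


def color_for_point(point, panorama):
    """Look up the RGB color of the pixel seen in the direction of `point`."""
    height, width = len(panorama), len(panorama[0])
    row, col = panorama_pixel(point, width, height)
    return panorama[row][col]
```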

These two types of data are then combined, such that each data point consists of a three-dimensional point in space and a color (a format sometimes referred to as XYZRGB). These datasets are called pointclouds because they store information about points in space, but not the connectivity information found in a mesh.
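To make the combined format concrete, here is a minimal sketch that pairs each position with its color and writes one point per line of text. The column order, the three-decimal precision, and the function name are assumptions; the exact layout of XYZRGB files varies between tools.

```python
def to_xyzrgb_lines(points, colors):
    """Pair each (x, y, z) with its (r, g, b) and format one point per line."""
    if len(points) != len(colors):
        raise ValueError("every point needs exactly one color")
    return [
        f"{x:.3f} {y:.3f} {z:.3f} {r} {g} {b}"
        for (x, y, z), (r, g, b) in zip(points, colors)
    ]


lines = to_xyzrgb_lines([(1.0, 2.0, 0.5)], [(255, 128, 0)])
print(lines[0])  # 1.000 2.000 0.500 255 128 0
```

Note that each line describes a single independent point. Nothing in the file says which points are neighbors on a surface, which is the connectivity a mesh would add.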

Unfortunately, this process can only capture what is in the scanner's line of sight, meaning that information is gathered only for what can be seen from the scanner's position. For example, no information is gathered for what is behind a chair, inside a closed refrigerator, etc.

To fill in this missing information, the scanning process is repeated from several positions in a scene, generating several pointclouds. These pointclouds are then combined in a process called registration. While registration can introduce error, newer algorithms keep the resulting errors very small.
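The final step of registration, once the alignment between two scans is known, can be sketched as moving one scan into the other's coordinate frame and concatenating the points. Estimating that alignment is the hard part and is not shown here. The sketch assumes the alignment is already known, and it simplifies the rotation to a turn about the vertical axis, whereas a real alignment generally needs a full 3D rotation. The function names are hypothetical.

```python
import math


def transform_scan(points, yaw, translation):
    """Rotate points about the vertical (z) axis by `yaw` radians, then shift them."""
    c, s = math.cos(yaw), math.sin(yaw)
    tx, ty, tz = translation
    return [
        (c * x - s * y + tx, s * x + c * y + ty, z + tz)
        for x, y, z in points
    ]


def merge_scans(reference, other, yaw, translation):
    """Bring `other` into the frame of `reference` and combine the two scans."""
    return list(reference) + transform_scan(other, yaw, translation)


scan_a = [(0.0, 0.0, 0.0)]
scan_b = [(1.0, 0.0, 0.0)]  # measured from a second scanner position
merged = merge_scans(scan_a, scan_b, math.pi / 2, (2.0, 0.0, 0.0))
print(len(merged))  # 2
```

Any error in the estimated rotation or translation shifts every point of the second scan, which is how registration can introduce error into the combined model.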

Once all of these scans are combined, a full model of each home can be analyzed and explored on 2D screens and in virtual reality.